Augmented reality based surgical navigation using head-mounted displays

BY MOHAMED BENMAHDJOUB

Over the past decades, surgical navigation has proved to be an important aid to surgeons. The technology localizes instruments with respect to preoperatively acquired images (e.g., CT, MRI). It also helps visualize the preoperative plan, so that critical structures can be avoided or a specific target reached during the operation. A crucial but time-consuming step is image-to-patient registration, which aligns the preoperative image with the real patient. In augmented reality (AR) navigation, the sensors of the AR device may help detect landmarks automatically, and thereby support a more automated image-to-patient alignment.

This article is based on "Fiducial markers detection trained exclusively on synthetic data for image-to-patient alignment in HMD-based surgical navigation," by M. Benmahdjoub, A. Thabit, W. J. Niessen, E. B. Wolvius, and T. Van Walsum, presented at the 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Sydney, Australia, 2023, pp. 429-434.

The project n° AOCFMS-23-06M was supported and funded by AO Foundation, AO CMF.

Automated image-to-patient registration in AR with deep learning models

Conventionally, image-to-patient registration is a manual process in which the surgeon uses a pointer to locate landmarks (anatomical or artificial) on the patient that have also been annotated on the diagnostic preoperative image. Augmented reality has been suggested as a potential solution to the burden of this manual step and has been explored in many craniomaxillofacial procedures. In AR navigation, the sensors of the AR device may help detect the landmarks automatically, and thereby support a more automated alignment of the patient to the image.

The automated detection of artificial markers (such as those stuck to the patient) is an important step in facilitating this alignment, and if the AR system is linked to a conventional navigation system, it enables automation of the image-to-patient registration procedure. Such automated approaches may also permit continuous quality checks of the patient-to-image alignment during navigation. Although deep learning-based methods are likely a suitable approach to such automated marker detection, the training of models is hampered by the lack of real data (pictures of patients). Our hypothesis is that such models can also be trained on synthetic images generated with photorealistic rendering engines. Demonstrating that this is indeed possible opens the way for novel AI approaches in surgery where real patient data for training are lacking.

Surgical navigation provides surgeons with spatial insight into where the anatomy and the surgical instruments are in the patient space during interventions. Image-to-patient alignment is an important step that enables preoperative images to be visualized directly overlaid on the patient. Conventionally, image-to-patient alignment is done with surface-based or point-based registration using anatomical or artificial landmarks. In point-based registration, surgeons use a trackable pointer to pinpoint landmarks on the patient, such as fiducial markers placed preoperatively, and match them with their counterparts in the preoperative image.

This method, although accurate, can be cumbersome and is currently time-consuming. Direct detection of these landmarks in video may speed up the registration process, making it a first step towards AR navigation using head-mounted displays.

Image-to-patient registration challenges in surgical navigation

Practitioners and surgeons rely on medical images to gain insight into a patient’s condition. In craniomaxillofacial surgery, for instance, these images can be used for surgical planning. To execute the surgical plan, 3D surgical guides have been used to constrain the surgeon’s instruments to a preplanned trajectory (drill) or cutting plane (saw), enabling accurate execution of the preplanned procedure [1].

When surgical guides cannot be used, conventional navigation systems may be an alternative. Such systems visualize the instrument position in relation to the anatomy on a screen near the surgical area. Conventional navigation systems require an image-to-patient registration step, which links the acquired image of the patient (e.g., CT) to the patient's physical anatomy. This registration step consists of matching anatomical and artificial landmarks on the patient, pinpointed with a tracked pointer, with the corresponding positions in the medical images [2].
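The landmark matching described above can be illustrated with a least-squares rigid fit. The following is a minimal sketch, assuming paired 3D landmark coordinates in millimetres and using the SVD-based (Kabsch) solution. The function names and the sample coordinates are hypothetical, and a given navigation system may use a different solver.

```python
import numpy as np


def rigid_register(image_pts, patient_pts):
    """Least-squares rigid transform (R, t) mapping image_pts onto patient_pts.

    Both inputs are (N, 3) arrays of corresponding landmarks (N >= 3,
    not all on one line), listed in the same order.
    """
    image_pts = np.asarray(image_pts, dtype=float)
    patient_pts = np.asarray(patient_pts, dtype=float)

    # Center both point sets on their centroids.
    c_img = image_pts.mean(axis=0)
    c_pat = patient_pts.mean(axis=0)

    # Cross-covariance matrix and its SVD.
    H = (image_pts - c_img).T @ (patient_pts - c_pat)
    U, _, Vt = np.linalg.svd(H)

    # Guard against a reflection (determinant of -1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) > 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = c_pat - R @ c_img
    return R, t


def fiducial_registration_error(image_pts, patient_pts, R, t):
    """Root-mean-square distance between mapped and measured landmarks."""
    mapped = np.asarray(image_pts, dtype=float) @ R.T + t
    diff = mapped - np.asarray(patient_pts, dtype=float)
    return float(np.sqrt(np.mean(np.sum(diff ** 2, axis=1))))


# Hypothetical landmark positions in the CT image (mm).
ct_landmarks = np.array([[0, 0, 0], [50, 0, 0], [0, 40, 0], [0, 0, 30]], float)

# Simulated pointer measurements: the same landmarks rotated and shifted.
angle = np.deg2rad(30.0)
R_sim = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                  [np.sin(angle), np.cos(angle), 0.0],
                  [0.0, 0.0, 1.0]])
pointer_landmarks = ct_landmarks @ R_sim.T + np.array([10.0, -5.0, 120.0])

R_est, t_est = rigid_register(ct_landmarks, pointer_landmarks)
fre = fiducial_registration_error(ct_landmarks, pointer_landmarks, R_est, t_est)
print(f"fiducial registration error: {fre:.6f} mm")
```

With noisy pointer measurements the residual would no longer be close to zero, which is one reason why the accuracy of landmark localization matters for the whole navigation chain.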

Accurate image-to-patient registration is a prerequisite for accurate execution of the surgical plan. However, conventional navigation systems visualize the information in 2D. Furthermore, the orientation in which the patient data are shown on the 2D monitor might not match the surgeon's viewpoint, leading to difficulties in hand-eye coordination. Combined with the continuous switching of attention between the screen and the surgical area, this may raise the cognitive load and make conventional navigation systems less intuitive to use.

Extended reality on the rise in medicine

Extended reality (XR) represents the spectrum of technologies that can bring virtual objects into reality, ranging from virtual reality to augmented reality (see Fig. 1). In virtual reality (VR), users are fully immersed in a virtual environment with no access to the real world. In contrast, augmented reality (AR) lets users see virtual elements while keeping access to the real world.

The number of AR/VR studies in medicine has been rising over the last decade (see Fig. 2). Applications of this technology range from training, learning, mental therapy, and preoperative planning to surgical navigation. The following sections focus on the use of AR for surgical navigation.

Using augmented reality in surgical navigation

AR-based approaches to surgical navigation have been proposed in the literature to address the limitations and challenges of conventional navigation systems. A main technological challenge of AR in surgical navigation is image-to-patient registration: in other words, estimating the location of the target anatomy with respect to the AR device.

When head-mounted displays (HMDs) are used, the image-to-patient registration problem can be solved in three ways:

- An outside-in approach, where an external tracking system, such as the optical or electromagnetic tracker of a conventional navigation system (see Fig. 3), tracks the HMD, the instruments, and the anatomy.

- An inside-out approach, where the HMD performs these same tasks by means of its built-in sensors (e.g., the RGB, grayscale, and depth cameras of the HoloLens 2), which also help the HMD keep track of its own location in the 3D environment.

- A hybrid approach, where outside-in and inside-out tracking are combined [4].
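To make the difference between these approaches concrete, the sketch below chains rigid transforms for the outside-in case, where the external tracker reports the pose of both the HMD and the patient. It is a simplified illustration under stated assumptions: poses are 4x4 homogeneous matrices, the function and variable names are hypothetical, and the calibration between the tracked marker on the HMD and its display is left out.

```python
import numpy as np


def make_pose(R, t):
    """Build a 4x4 homogeneous pose from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(R, dtype=float)
    T[:3, 3] = np.asarray(t, dtype=float)
    return T


def invert_pose(T):
    """Invert a rigid pose without a general matrix inverse."""
    R = T[:3, :3]
    t = T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv


def patient_in_hmd(T_tracker_hmd, T_tracker_patient):
    """Outside-in: express the patient pose in the HMD frame.

    T_tracker_x maps coordinates from frame x into the tracker frame,
    so hmd <- tracker <- patient gives the pose the HMD needs for rendering.
    """
    return invert_pose(T_tracker_hmd) @ T_tracker_patient


# Hypothetical tracker readings (mm): HMD rotated 90 degrees about z.
R_z90 = np.array([[0.0, -1.0, 0.0],
                  [1.0, 0.0, 0.0],
                  [0.0, 0.0, 1.0]])
T_tracker_hmd = make_pose(R_z90, [0.0, 0.0, 1000.0])
T_tracker_patient = make_pose(np.eye(3), [100.0, 0.0, 1000.0])

T_hmd_patient = patient_in_hmd(T_tracker_hmd, T_tracker_patient)
print(T_hmd_patient[:3, 3])
```

In an inside-out setup, the HMD would estimate the equivalent of `T_hmd_patient` directly from its own cameras, so no external tracker appears in the chain.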

In general, inside-out tracking is less accurate than outside-in tracking. However, the simplicity of an HMD-only navigation system can be a major benefit in the operating room (OR).

Image-to-patient alignment using the HoloLens 2 concept

In our study we use artificial surgical landmarks for image-to-patient registration. By automatically detecting these artificial surgical landmarks (see Fig. 4) on the patient, and annotating these in the image, we can align the imaging data with the patient anatomy.

We use the HoloLens 2 to digitize the centers of the artificial landmarks. To this end, we needed to automate landmark localization using the RGB camera of the HoloLens [5]. Fig. 5 summarizes the workflow of the solution.
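As an illustration of what a landmark center detected in an RGB frame provides geometrically, the sketch below converts a bounding box into a pixel center and then into a viewing ray in the camera frame. It assumes an ideal, undistorted pinhole camera. The intrinsic parameters and function names are hypothetical and are not taken from the HoloLens 2 or from the workflow in Fig. 5.

```python
import math


def box_center(x_min, y_min, x_max, y_max):
    """Pixel center of an axis-aligned bounding box."""
    return ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0)


def pixel_to_ray(u, v, fx, fy, cx, cy):
    """Unit direction in the camera frame that projects onto pixel (u, v).

    fx, fy are focal lengths in pixels and (cx, cy) is the principal point.
    Lens distortion is ignored in this sketch.
    """
    x = (u - cx) / fx
    y = (v - cy) / fy
    norm = math.sqrt(x * x + y * y + 1.0)
    return (x / norm, y / norm, 1.0 / norm)


# Hypothetical intrinsics and a hypothetical detection box.
FX, FY, CX, CY = 1000.0, 1000.0, 640.0, 360.0
u, v = box_center(700, 300, 740, 340)
ray = pixel_to_ray(u, v, FX, FY, CX, CY)
print(ray)
```

A single frame only constrains the landmark to lie somewhere along this ray. Recovering a full 3D position requires additional information, such as several views, a depth measurement, or known geometry between the landmarks, which this sketch does not cover.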

Deep learning landmark detection

Since the shape and color (transparency) of the landmarks make them hard to detect with traditional computer vision algorithms, we opted for a deep learning object detection model (in our case YOLOv5). Object detection models require a large amount of training data. In our case, the data would be 2D RGB images and their annotations, which consist of the location of every landmark’s center in the images.
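For readers unfamiliar with how such annotations are stored, the sketch below writes one landmark annotation in the plain-text label format commonly used with YOLOv5, where each row holds a class index followed by the box center and box size, all normalized by the image dimensions. The box size around each landmark center, the image resolution, and the function names are assumptions made for illustration, since the annotations described above only specify the centers.

```python
def yolo_label(center_px, box_size_px, image_size_px, class_id=0):
    """Format one annotation row: class x_center y_center width height.

    All four geometric values are normalized to the range 0..1.
    """
    cx, cy = center_px
    bw, bh = box_size_px
    iw, ih = image_size_px
    return f"{class_id} {cx / iw:.6f} {cy / ih:.6f} {bw / iw:.6f} {bh / ih:.6f}"


def center_from_yolo(row, image_size_px):
    """Recover the landmark center in pixels from a label or prediction row."""
    _, x_c, y_c, _, _ = row.split()
    iw, ih = image_size_px
    return (float(x_c) * iw, float(y_c) * ih)


# Hypothetical example: a landmark centered in a 1920x1080 image,
# annotated with an assumed 48x48 pixel box.
row = yolo_label((960, 540), (48, 48), (1920, 1080))
print(row)
print(center_from_yolo(row, (1920, 1080)))
```

Because the box is centered on the landmark, the center of a predicted box can be read back directly as the estimated landmark position.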

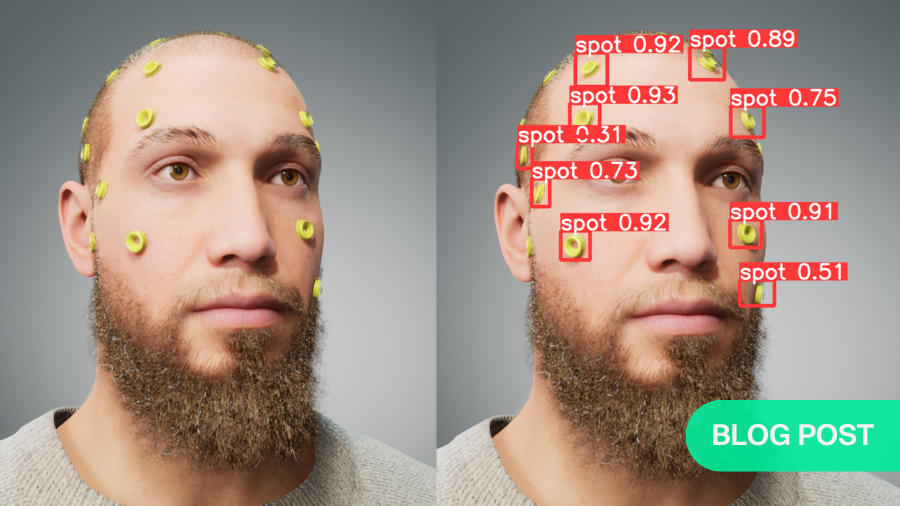

Since this type of data was unavailable, we decided to generate synthetic data that mimic a real scenario in which registration landmarks are attached to the head of a patient. For this, we used Unreal Engine and MetaHuman [6] to generate photorealistic images of humans with attached registration landmarks (see Fig. 6), together with the positions of the landmarks’ centers in every image.

The object detection model was trained exclusively on the generated data to locate the landmarks, and then was tested on video images coming from HoloLens 2 (see Fig. 6, C-D).

The model trained on synthetic data achieved a mean average precision of 99% on the generated RGB test images, with a mean distance error of 0.9 pixels in localizing the centers of the landmarks.

The same model, without fine-tuning on real images from the HoloLens 2 (HL2) camera, detected the landmarks with a precision of 94% and a mean distance error of 3.8 pixels in localizing their centers.
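To show how figures of this kind can be computed, the sketch below matches predicted centers to annotated centers and reports a precision and a mean center distance in pixels. It is a simplified illustration, not the evaluation protocol of the study: the greedy nearest-neighbor matching, the distance threshold, and the function name are assumptions, and it does not compute mean average precision.

```python
import math


def match_and_score(pred_centers, true_centers, max_dist_px=10.0):
    """Greedily match predictions to ground truth and score them.

    Returns (precision, mean_error_px). A prediction counts as correct
    when an unmatched ground-truth center lies within max_dist_px of it.
    """
    unmatched = list(true_centers)
    errors = []
    for p in pred_centers:
        if not unmatched:
            break  # remaining predictions have nothing left to match
        nearest = min(unmatched, key=lambda g: math.dist(p, g))
        d = math.dist(p, nearest)
        if d <= max_dist_px:
            errors.append(d)
            unmatched.remove(nearest)
    precision = len(errors) / len(pred_centers) if pred_centers else 0.0
    mean_error = sum(errors) / len(errors) if errors else float("nan")
    return precision, mean_error


# Hypothetical detections and annotations for one frame (pixels).
predictions = [(100, 100), (203, 204), (500, 500)]
annotations = [(100, 101), (200, 200)]
precision, mean_error = match_and_score(predictions, annotations)
print(f"precision={precision:.2f}, mean center error={mean_error:.1f} px")
```

Object detection benchmarks usually match boxes by overlap rather than center distance, so the numbers from this sketch are not directly comparable to the reported results.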

Object detection models trained on synthetic data for surgical navigation

The experiment showed the feasibility of using object detection models to tackle the first step of image-to-patient registration in a surgical navigation context. Notably, the model trained on synthetic data, without any fine-tuning on real data, was able to detect surgical landmarks in the images coming from the HL2.

The workflow can be improved at every step: data generation, training, and registration. The generation simulated the real scenario as closely as possible, but such a faithful simulation may not be needed to obtain satisfactory results: generating images in which only the landmarks are visible might give the same results.

It is important to mention that this study focused on the first three steps of the proposed workflow for image-to-patient registration. Future work will investigate how to improve the detection rate and accuracy, and whether the detected landmarks can be used to perform the registration step.

We showed an approach in which object detection models trained on synthetic data detect landmarks in a HoloLens 2 video feed; these detections can then be used for image-to-patient registration. This approach may facilitate seamless registration of imaging data to the patient for surgeons who use an HMD as a surgical navigation system.

About the author:

Mohamed Benmahdjoub is a postdoctoral researcher at the Biomedical Imaging Group Rotterdam (BIGR) and the department of oral and maxillofacial surgery at Erasmus MC hospital, Rotterdam, The Netherlands.

The focus of his research is surgical navigation using augmented reality, more specifically image-to-patient registration, visualization, and 3D perception using head-mounted displays.

References and further reading:

- T. Ebker, P. Korn, M. Heiland, and A. Bumann, “Comprehensive virtual orthognathic planning concept in surgery-first patients,” British Journal of Oral and Maxillofacial Surgery, vol. 60, no. 8, pp. 1092–1096, Oct. 2022. doi: 10.1016/J.BJOMS.2022.04.008.

- S. H. Kang, M. K. Kim, J. H. Kim, H. K. Park, S. H. Lee, and W. Park, “The validity of marker registration for an optimal integration method in mandibular navigation surgery,” Journal of Oral and Maxillofacial Surgery, vol. 71, pp. 366–375, Feb. 2013. doi: 10.1016/J.JOMS.2012.03.037.

- M. Benmahdjoub, et al., "Evaluation of AR visualization approaches for catheter insertion into the ventricle cavity" in IEEE Transactions on Visualization & Computer Graphics, vol. 29, no. 05, pp. 2434-2445, 2023. doi: 10.1109/TVCG.2023.3247042

- Benmahdjoub, M., Niessen, W.J., Wolvius, E.B. et al. Multimodal markers for technology-independent integration of augmented reality devices and surgical navigation systems. Virtual Reality 26, 1637–1650 (2022). https://doi.org/10.1007/s10055-022-00653-3

- Lepetit, V., Moreno-Noguer, F. & Fua, P. EPnP: An Accurate O(n) Solution to the PnP Problem. Int J Comput Vis 81, 155–166 (2009). https://doi.org/10.1007/s11263-008-0152-6

- https://www.unrealengine.com/en-US/metahuman
